Deep Fusion: iPhone 11 Camera AI Software

Apple has a new image processing technique for the iPhone 11, 11 Pro and 11 Pro Max. It’s new AI software, and it’s called Deep Fake… no no, Deep Fusion. Because it’s no longer enough to be pro, you now also have to be deep.

Deep Fusion is now available as part of both the developer beta and the public beta of iOS 13.2. Unfortunately, it only works on iPhones with the A13 Bionic processor, because it relies on that chip’s Neural Engine.

“Next-generation Smart HDR uses advanced machine learning to capture more natural-looking images with beautiful highlight and shadow detail on the subject and in the background,” says Apple.

Apple also say the new software “uses advanced machine learning to do pixel-by-pixel processing of photos, optimizing for texture, details and noise in every part of the photo.”

What does Deep Fusion do?

This new camera AI further improves the iPhone’s ability to capture detail in photos. We have already talked about an iPhone 11 feature called Semantic Rendering. This process lets the iPhone camera “see” the difference between people and other objects. The software then adjusts what it believes is the person in the photo, sharpening hair detail, for example.

On top of Semantic Rendering, the iPhone 11 already has Smart HDR. This software captures a series of images instead of one and blends them together, improving the dynamic range and detail of the final image still further.

And that’s not all. There’s also Night Mode, which has a dramatic effect in low light situations. In this mode, your camera can almost see in the dark.

Now add Deep Fusion to all this. Whereas Night Mode is for low-to-very-low light, Deep Fusion is for medium-to-low light (i.e. not too bright and not too dark). In other words, we are talking about typical indoor situations.

In such situations, the camera automatically switches into Deep Fusion mode to lower image noise and optimize detail. Unlike Smart HDR, Deep Fusion works at the pixel level. The lens also makes a difference: with the telephoto lens on the iPhone 11 Pro, for example, Deep Fusion is used in all conditions except very low light or very bright light.

How does Deep Fusion work?

When you see how much work is going on behind the scenes of your iPhone 11 Pro, it starts to sound a little crazy.

As with Smart HDR, a series of images is captured rather than just one. One of them is a reference photo with as little motion blur as possible. Then 3 standard exposures and 1 long exposure are combined into a single “synthetic” long exposure photo.
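To see why merging several frames helps, here is a toy Python sketch of blending aligned frames into one “synthetic” long exposure by weighted per-pixel averaging. It is not Apple’s algorithm: it assumes the frames are already aligned and brightness-matched, represents images as plain lists of grayscale values, and the extra weight given to the long exposure is a hypothetical choice for illustration.

```python
from typing import List, Sequence

# A grayscale image as rows of pixel values (0.0 = black, 1.0 = white).
Image = List[List[float]]


def synthetic_long_exposure(frames: Sequence[Image], weights: Sequence[float]) -> Image:
    """Blend aligned, brightness-matched frames into one image.

    Each output pixel is the weighted average of that pixel across all frames.
    Random noise differs from frame to frame while the scene does not, so
    averaging pulls each pixel toward its true value.
    """
    if not frames or len(frames) != len(weights):
        raise ValueError("need at least one frame and exactly one weight per frame")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    height, width = len(frames[0]), len(frames[0][0])
    merged: Image = []
    for y in range(height):
        row = []
        for x in range(width):
            weighted_sum = sum(w * frame[y][x] for frame, w in zip(frames, weights))
            row.append(weighted_sum / total)
        merged.append(row)
    return merged


# Usage: three noisy "standard" frames plus one long exposure (1x2 pixels each).
# Giving the long exposure double weight is a hypothetical choice for illustration.
standard = [[[0.48, 0.52]], [[0.52, 0.50]], [[0.50, 0.48]]]
long_exposure = [[0.50, 0.50]]
merged = synthetic_long_exposure(standard + [long_exposure], [1.0, 1.0, 1.0, 2.0])
print([[round(v, 3) for v in row] for row in merged])  # [[0.5, 0.5]]
```

Each individual frame is off by a little in a different direction, but the merged pixels settle at the underlying value of 0.5, which is the basic reason multi-frame capture reduces noise.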

Deep Fusion then divides the reference photo and the combined long exposure into regions, identifying sky, walls, people, skin, textures and fine details (e.g. hair). The software then analyses the photos pixel by pixel (up to 24 million pixels).

Finally, the software chooses which pixels to use to create the best, most detailed, least noisy image.
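The final per-pixel choice can be illustrated with another toy Python sketch, assuming two aligned grayscale images: a sharp but noisy reference frame and a smoother synthetic long exposure. The `local_detail` measure and the `detail_threshold` value are hypothetical stand-ins; Apple has not published how Deep Fusion weighs pixels, and the real system is far more sophisticated.

```python
from typing import List

# A grayscale image as rows of pixel values (0.0 = black, 1.0 = white).
Image = List[List[float]]


def local_detail(img: Image, y: int, x: int) -> float:
    """Mean absolute difference between a pixel and its in-bounds 4-neighbours."""
    h, w = len(img), len(img[0])
    diffs = [
        abs(img[y][x] - img[ny][nx])
        for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1))
        if 0 <= ny < h and 0 <= nx < w
    ]
    return sum(diffs) / len(diffs) if diffs else 0.0


def fuse_per_pixel(reference: Image, synthetic_long: Image,
                   detail_threshold: float = 0.1) -> Image:
    """Pick each output pixel from one of two aligned images.

    Detail is measured on the smoother synthetic long exposure so that random
    noise in the reference frame is not mistaken for texture. Where detail is
    high (edges, hair, fabric) the sharper reference pixel is kept; in flat
    areas (sky, walls) the less noisy synthetic-long pixel is used.
    """
    h, w = len(reference), len(reference[0])
    out: Image = []
    for y in range(h):
        row = []
        for x in range(w):
            if local_detail(synthetic_long, y, x) > detail_threshold:
                row.append(reference[y][x])
            else:
                row.append(synthetic_long[y][x])
        out.append(row)
    return out


# Usage: a 1x4 strip with a flat area on the left and a bright edge on the right.
reference = [[0.34, 0.27, 0.31, 0.95]]   # sharp but noisy
synthetic_long = [[0.3, 0.3, 0.3, 0.9]]  # smoother
fused = fuse_per_pixel(reference, synthetic_long)
print(fused)  # [[0.3, 0.3, 0.31, 0.95]]
```

In the usage example, the two flat pixels on the left come from the synthetic long exposure, while the two pixels at the bright edge keep the reference values.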

What does it look like?

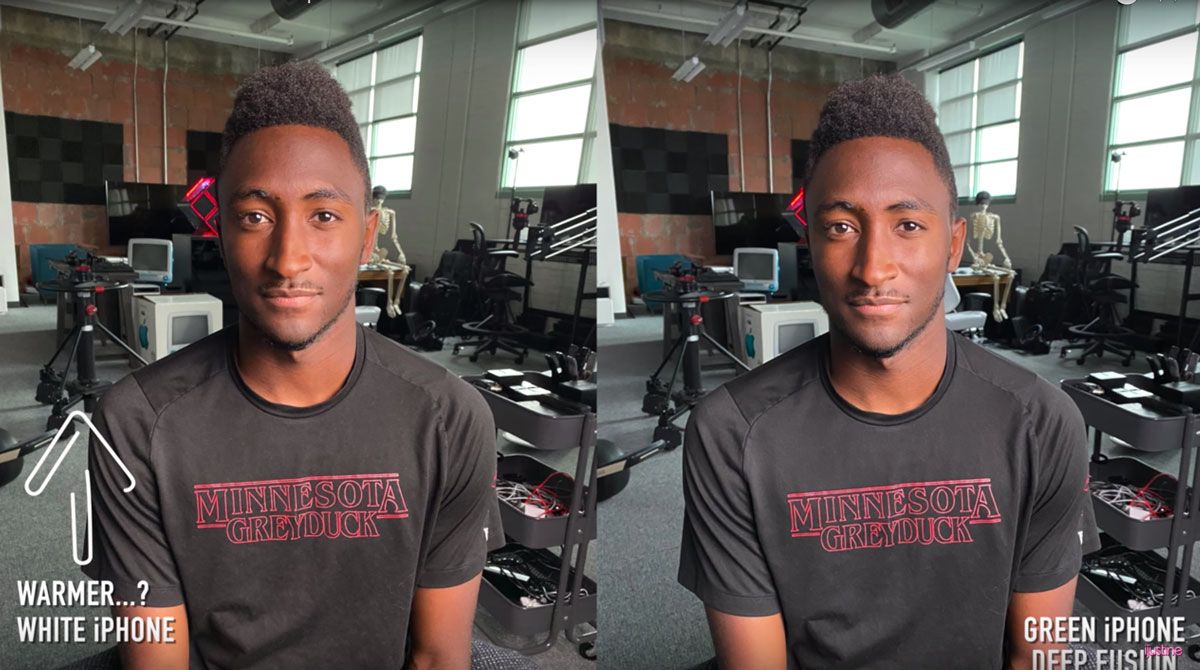

YouTubers iJustine and MKBHD have been testing it out. The difference is so subtle that they play a game with two iPhone 11s to see if they can guess which one is using Deep Fusion. You can see a difference, but the adjustments are very fine.

Of course, taking a photo and then uploading it to YouTube will result in some of those details being compressed out. And here I’ve made a screengrab, which will get compressed even further.

Can you spot which camera is using Deep Fusion?

The answer is that the image on the right was taken using the new software. Aside from the extra detail (which isn’t very visible), I would say the skin tone on the right is more natural and even. The photo on the right looks less “digital”, or less like it’s had its saturation boosted to compensate for the iPhone’s small sensor.

For years now, smartphone photographers have used Instagram-style filters to make their images more attractive. Indeed, Instagram introduced these filters as a way to compensate for the poor quality of smartphone photos. As a result, we’re now used to seeing highly saturated, high-contrast images.

Of course, this style also helps when you are viewing photos on a small smartphone screen. However, Deep Fusion (in combination with the other processes) is gradually closing that quality gap. Although this update might appear subtle, it’s yet another step along that path.


Simon Horrocks

Simon Horrocks is a screenwriter & filmmaker. His debut feature THIRD CONTACT was shot on a consumer camcorder and premiered at the BFI IMAX in 2013. His shot-on-smartphones sci-fi series SILENT EYE featured on Amazon Prime. He now runs a popular Patreon page which offers online courses for beginners, customised tips and more: www.patreon.com/SilentEye