Please Fix This One Thing: 12 Months Filming – iPhone vs Android

I’ve been using these 2 smartphones to shoot video for a whole year. That’s plenty of time to compare them for shooting video – iOS vs Android, iPhone vs Samsung. And really there’s just this one feature which has me asking, “Why Apple, why?”

But there are pros and cons to every system. So, in this article, I’ll go over what I like and what I don’t like about iOS and Android. I’ve been comparing the 2 systems over the last 12 months, as I use them in my daily work, and this is what I found out.

iPhone vs Android: 12 Months Filming

Up until 2021 I was mainly a Samsung user. I’ve shot movies with the S8 and the S9, then more recently I upgraded to the Note 20 Ultra. Last February, I bought my first iPhone – the 12 Pro Max. Reading all the online discussions wasn’t enough – I had to try them for myself.

Now, I use both these devices constantly for my video work, on the channel as well as for other work. I’m not really biased towards one or the other and they’ve both had pretty rigorous testing.

In this article I will ask:

- Which of the two devices and systems is more reliable?

- Which of the 2 systems is easier to use?

- What about compatibility with other systems and devices?

- Now that computational processes are playing a bigger and bigger role in how smartphone cameras work, which system handles it best?

And I’ll also talk about how things are looking for iOS and Android in 2022.

Reliability

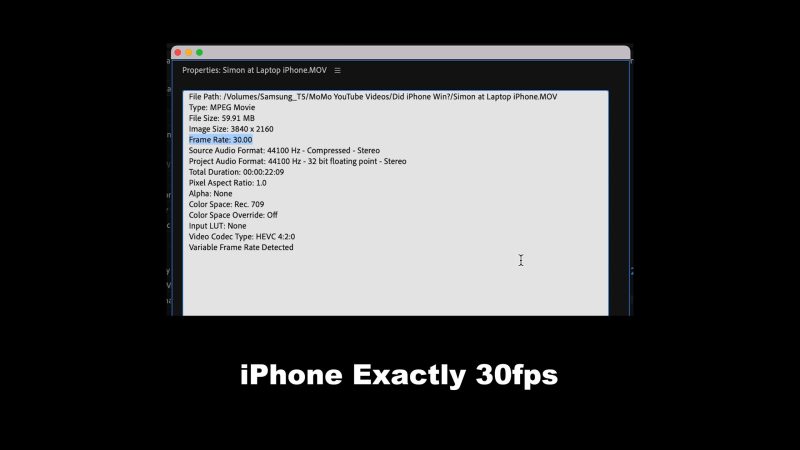

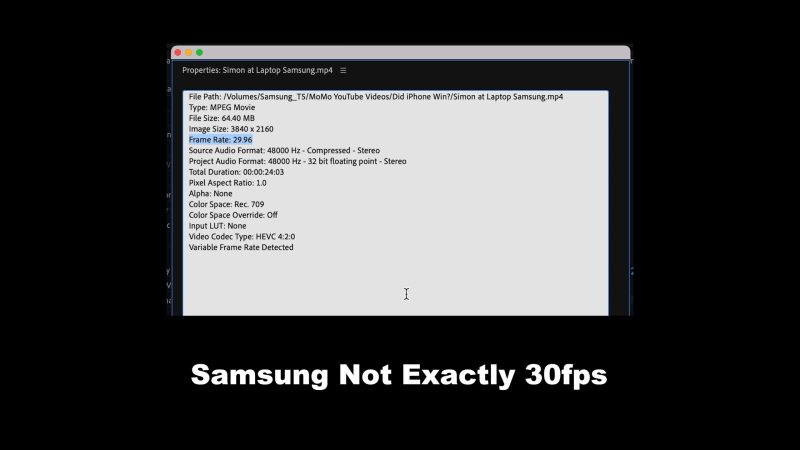

I find both devices to be pretty reliable. But one area where I notice a slight difference is in the accuracy of frame rates. Smartphones generally record video at a variable frame rate, so the gap between one frame and the next is not perfectly constant.

But when the processor of your smartphone is pushed to the limit, you might see frame rates start to become less reliable. And when frame rates are less reliable, you might see your videos start to look a little less smooth. Especially when the camera is moving.
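If you want to put a number on how reliable a frame rate is, one option is to look at the gaps between frame timestamps. Here’s a minimal Python sketch, assuming you already have a list of frame timestamps in seconds (exported from your clip with whatever tool you prefer). The function name and the sample data are made up for illustration and are not measurements from either phone.

```python
from statistics import mean, pstdev


def frame_interval_stats(timestamps_s):
    """Return (average fps, jitter in ms, worst gap in ms) for frame timestamps in seconds."""
    if len(timestamps_s) < 2:
        raise ValueError("need at least two frame timestamps")
    # Gap between each frame and the one after it
    intervals = [b - a for a, b in zip(timestamps_s, timestamps_s[1:])]
    average_fps = 1.0 / mean(intervals)
    jitter_ms = pstdev(intervals) * 1000.0   # spread of the gaps
    worst_gap_ms = max(intervals) * 1000.0   # longest single gap
    return average_fps, jitter_ms, worst_gap_ms


# Hypothetical data: one second of perfectly steady 60fps video...
steady = [i / 60 for i in range(61)]
# ...and the same clip with one frame dropped in the middle
dropped = [t for i, t in enumerate(steady) if i != 30]

print(frame_interval_stats(steady))
print(frame_interval_stats(dropped))
```

The steady clip has almost zero jitter, while the clip with the dropped frame has one gap twice as long as the others. That longer gap is the kind of thing you see as a stutter when the camera is moving.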

A Tale of 2 Chips

The processor in the iPhone 12 Pro Max is an Apple A14 Bionic, while my Samsung Note 20 Ultra has the Exynos 990. The new iPhones have the A15 Bionic.

Samsung devices such as the Note 20 Ultra came in 2 varieties. The American version came with a Qualcomm chip, while the European version came with the Exynos 990, which is the one I have. The Exynos 990 was well known for weaker battery life. However, the Exynos 2100 that came in the Samsung S21 matched the Qualcomm version more evenly.

From what I understand, the iPhone processor chips are somewhat more powerful than the Exynos or Qualcomm chips in most Android phones. And that includes the new Qualcomm Snapdragon 8 Gen 1 chip, which is coming to new Android devices this year and has been getting a lot of hype.

A more powerful processor means more reliable frame rates, especially when shooting at higher quality settings such as 4K at 60fps. It also means I can happily shoot at 4K 60fps with my iPhone and it won’t overheat.

Again, when your smartphone overheats, it becomes less reliable when shooting video.

That said, I haven’t noticed a massive difference. But that might be partly because of the way I use these devices. I tend to shoot 60fps video with the iPhone more than the Samsung.

So I think I would give iPhone a small advantage in the reliability department. And looking ahead to 2022, it looks like this isn’t going to change in the near future. But here’s why I always use my Samsung for filming myself and for close up B roll shots.

Softer for Portraits

When I’m working on videos for this channel, I want to work as fast as I can but without sacrificing quality. For that reason, I often use the native camera and keep everything set to auto. If I’m demonstrating a bit of kit or filming myself, good auto focus saves me a lot of time.

If I film myself with iPhone, I find the result is usually less flattering somehow. The Samsung video quality is a little softer and gentler, with less aggressive tone mapping going on. Whereas iPhone tends to give you excellent detail, which is not always flattering.

For that reason, the Samsung is my go-to device for those tasks.

OK, let’s talk about ease of use.

Ease of Use

For ease of use, there are things to look at like getting to camera settings, moving files around, and the compatibility of those files.

The Samsung has a button which takes you directly to video settings. There’s also Pro Mode, which I can get to very easily. Here’s a little tip to make that easier if you have a Samsung. In the More section you can find all these extra features, including Pro Video. You can add these to the main menu, so they are quicker to get to.

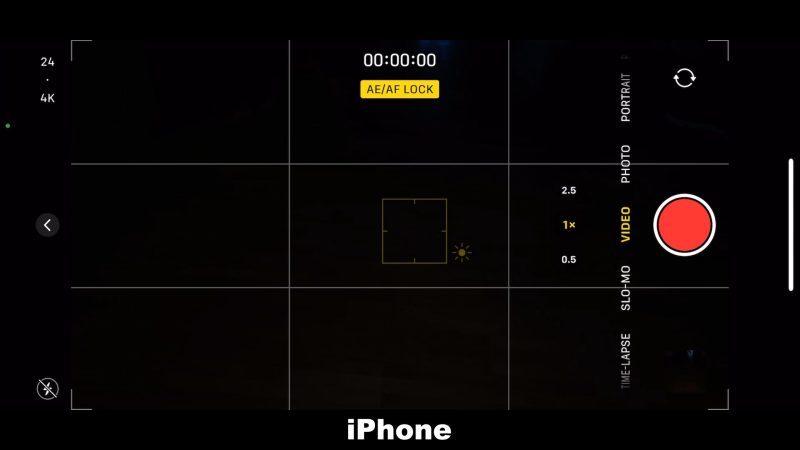

Just a swipe on the menu gets me to full manual control. iPhone is not so simple. We can switch frame rate and resolution in the top corner. For other settings, we need to switch out of the camera app and find the video settings, which sit within a menu, within a menu of settings.

Thing is, because iPhone doesn’t have manual settings natively, I don’t often need to go into camera settings. Rather, I will need to open a third-party app like FiLMiC Pro. If you have them both open, it’s not so hard to switch between 2 apps. But it’s not quite as convenient as the Samsung.

For ease of use then, the Android edges it. But how well does each device work with other devices and software?

Compatibility

One of the things people love (or hate) about Apple is the closed ecosystem. iPhone isn’t my only Apple device. I also have the iPad Pro from 2020 and a MacBook Air, from last year, with the M1 chip. So getting my video files from iPhone to the other Apple devices is very easy using AirDrop.

When I send files from my Samsung to the MacBook Air, I either use Google Drive (which is pretty slow) or I use a cable and a little program called Android File Transfer, which works pretty smoothly.

One annoying thing is that my Samsung asks for permission each time, and when you tap allow, Android File Transfer closes. So then you need to open it again to start moving files. It’s just a small thing, but when you are using your cameras every day, it’s sometimes these small things which get your attention. And recently I found the AFT app goes into a loop where it keeps asking you to allow access to the Samsung, which is really frustrating.

The smoother and sweeter everything works together, the happier the filmmaker. I believe there is a similar function to AirDrop on Android, but I’m not sure how easy it is to use or which hardware it works with.

That’s the thing. I would probably have to spend some hours researching, with no guarantee it would work. But when you are invested in the Apple ecosystem, it’s simple. AirDrop – it works. Time saved.

Connection

Right here is where iPhone gets a slight thumbs down, as Apple insists on retaining the Lightning port. This means I need a Lightning cable for charging, for file transfers and for connecting microphones. Not so with the Note 20 Ultra, which uses the same USB-C port everything else uses, including my iPad and my MacBook Air.

There’s a rumour Apple is going to get rid of the Lightning port for the next iPhone, the 14. But rather than use USB-C, word on the street says they might switch to no port at all. Everything will then have to be wireless, including charging. We will have to wait until the end of the year to find out if that’s true.

(It’s probably not true)

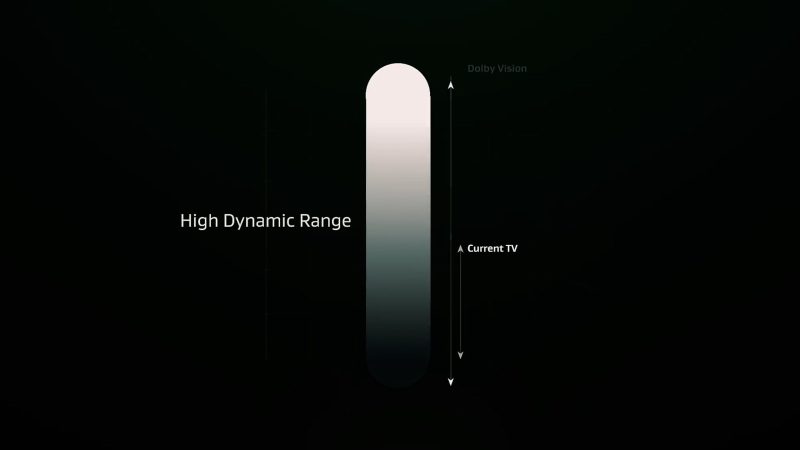

Computational Dynamic Range

Smartphones add extra dynamic range using computational methods. There are HDR formats such as HDR10+ and Dolby Vision, which use dynamic metadata. And there’s dynamic tone mapping, which brightens or darkens different parts of the image by different amounts, a bit like using a different ISO for each area.

When it comes to HDR video, iPhone has Dolby Vision, while Samsung (and other Androids) have HDR10+. Again, the Apple ecosystem makes using Dolby Vision much easier than HDR10+.

If you want to edit Dolby Vision video, LumaFusion or Apple’s native editing systems (iMovie and Final Cut Pro) are ready and relatively simple to set up. But Adobe still hasn’t got to grips with Dolby Vision or HDR10+.

I’ve already spent several hours trying to get Premiere Pro to master Dolby Vision without a red tint. Maybe I could eventually get it to work. But it all comes down to time again.

Apple supports not just shooting Dolby Vision but the whole process (as long as you don’t mind investing in the Apple system). With HDR10+, you’re on your own.

I think Dolby Vision looks nicer than HDR10+, as well.

On the other hand, smartphone filmmakers aren’t yet using Dolby Vision or HDR10+ that much. It’s still a little early for this technology. So, perhaps when it’s more commonly used, Adobe will get their act together and it will be easier to use outside of the Apple system.

Please Fix This One Thing, Apple

So now to the one iPhone thing I wish Apple would fix.

In all recent iPhones, since iPhone 7 I believe, Apple has implemented quite aggressive dynamic tone mapping.

Most of the time, you might not notice it. The problem arises in situations where you want to lock exposure, but you have high contrast within the frame, which is also moving during the shot.

Say we have a dark scene and lock exposure because we want this scene to look dark. As soon as we bring something bright into the shot, like my brightly lit hand, the whole frame adjusts exposure. It’s trying to even out the exposure across the frame, so it pushes up the dark areas to match the brightness of my hand.

Even if we switch to FiLMiC Pro, and lock exposure, Apple’s dynamic tone mapping overrides it. This is pretty much unusable in a professional filmmaking situation.

Android smartphones also do this. But Samsung devices allow you to switch to Pro Video mode, which completely disables tone mapping. Then you get less dynamic range, but you get control of the exposure.

Why Apple doesn’t implement this, I don’t know. If they did, we could choose to use tone mapping when it helps us, and switch it off when it works against us.
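To make the idea concrete, here’s a toy Python sketch of scene-adaptive tone mapping. It is only an illustration and is not Apple’s or Samsung’s actual algorithm. The function name, the `strength` parameter and the pixel values are all assumptions I’ve invented for the example. Pixel brightness runs from 0 (black) to 1 (white), and the “sensor” values stay fixed, as they would with exposure locked.

```python
def tone_map(frame, strength=0.5):
    """Toy tone mapper: the brighter the brightest pixel, the more the shadows get lifted."""
    peak = max(frame)
    # A bright peak gives a lower gamma, and a gamma below 1 brightens dark pixels
    gamma = 1.0 - strength * peak
    return [pixel ** gamma for pixel in frame]


# Hypothetical locked-exposure pixel values for a dark scene
dark_scene = [0.02, 0.03, 0.05]
# The same scene with a brightly lit hand entering the frame
with_hand = dark_scene + [0.9]

shadow_before = tone_map(dark_scene)[0]
shadow_after = tone_map(with_hand)[0]
print(shadow_before, shadow_after)

# strength=0 stands in for an "off" switch: pixels pass through unchanged
untouched = tone_map(with_hand, strength=0.0)
print(untouched)
```

The darkest pixel never changes at the sensor, but it comes out several times brighter once the hand is in the frame, because the mapping depends on the whole picture. With `strength` set to 0 the mapping does nothing, which is the kind of choice a tone mapping off switch would give us.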

My iPhone / Android Workflow

After a year of use, I’ve settled on a workflow which seems to be pretty efficient now. Essentially, I use my Samsung for talking to camera and equipment shots. For example, “how to” content, where I’m showing how stuff works.

But for stuff like filming with a gimbal, I use iPhone. The more reliable frame rate and the extra dynamic range, plus more support from the apps which come with the gimbals, give me some really nice quality video.

Turns out smartphones are like people, with strengths that complement each other when put together. Which is like a message to humanity, isn’t it? When we work together, we win together.

I think I might have a future hosting corporate team building events…

But seriously, if I had to choose one, I guess I would pick iPhone. As I’m invested in the Apple system, it makes life easier. Plus the Apple processors appear to be ahead of the game, right now.

Good news is, I don’t have to choose. And I’m eyeing up the S22 or maybe the Note 22 (if there is one) as an upgrade this year…

Smartphone Video – Beginner to Advanced

If you want to know more about smartphone filmmaking, my book Smartphone Videography – Beginners to Advanced is now available to download for members on Patreon. The book is 170 pages long and covers essential smartphone filmmaking topics:

Things like how to get the perfect exposure, when to use manual control, which codecs to use, HDR, how to use frame rates, lenses, shot types, stabilisation and much more. There’s also my Exploring the Film Look Guide as well as Smartphone Colour Grading.

Members can also access all 5 episodes of our smartphone-shot Silent Eye series, with accompanying screenplays and making-of podcasts. There are other materials too and I will be adding more in the future.

If you want to join me there, follow this link.

Simon Horrocks

Simon Horrocks is a screenwriter & filmmaker. His debut feature THIRD CONTACT was shot on a consumer camcorder and premiered at the BFI IMAX in 2013. His shot-on-smartphones sci-fi series SILENT EYE featured on Amazon Prime. He now runs a popular Patreon page which offers online courses for beginners, customised tips and more: www.patreon.com/SilentEye